Precision 2D/3D Imaging and PointNet++ Based Object Classification of Concealed Objects using an FMCW Millimeter-Wave Radar

Yaheng Wang, Jie Su, Hironaru Murakami and Masayoshi Tonouchi

Institute of Laser Engineering, Osaka University, Osaka, Japan

Millimeter waves (MMWs), electromagnetic waves with frequencies between 30 and 300 GHz, have garnered considerable attention in scientific research because of their high penetration, harmlessness to the human body, and robustness to weather. These advantages have driven the development of various applications, including automotive radar [1], non-destructive testing [2], and hazardous material detection for security screening [3]. In these domains, the integration of artificial intelligence (AI) technology has emerged as a crucial breakthrough, enabling the automatic classification of objects in the point clouds obtained by MMW imaging. However, deep learning research on object classification using MMW point clouds is currently limited. This limitation mainly stems from the low accuracy of the point clouds obtained after MMW imaging data processing, which cannot reconstruct object contours with the required accuracy [4]. Consequently, the classification accuracy of deep learning in this setting is comparatively low. The objective of this study is therefore to develop a data processing technique that obtains high-precision three-dimensional (3D) information on concealed objects from MMW imaging, and to combine it with deep learning to classify those objects automatically from their features in the MMW images.

This study utilized multiple-input multiple-output synthetic aperture radar (MIMO-SAR) technology, employing a frequency-modulated continuous wave (FMCW) millimeter-wave MIMO radar for 2D and 3D imaging. The IWR1443 module was mounted on a 2-axis mechanical stage for X-Z scanning, with a chirp signal duration of 40 μs in the 77-81 GHz band [5]. Analyzing the intermediate-frequency (IF) signals obtained from the transmitted and received chirps produced Range FFT spectra, which give distance and reflection intensity information as a function of frequency. For 2D reconstruction, we used cross-sectional imaging at a specific distance. For 3D imaging, we developed a three-step noise reduction method: clustering data for similarity, monolayering the point cloud, and applying 3D averaging to the monolayered data.
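The Range FFT step can be illustrated with a minimal sketch. It is not the authors' processing code: it assumes an ideal linear chirp that sweeps the full 4 GHz of the 77-81 GHz band in 40 μs, and the ADC sample rate, the function name `range_profile`, and the synthetic single reflector are hypothetical choices made only for illustration. The beat frequency f_b of the IF signal maps to distance through R = c·f_b / (2·S), where S is the chirp slope.

```python
import numpy as np

C = 299_792_458.0  # speed of light in m/s


def range_profile(if_samples, sample_rate_hz, chirp_duration_s, bandwidth_hz):
    """Range FFT of one chirp: returns (ranges in m, magnitude per range bin)."""
    n = len(if_samples)
    # A Hann window reduces spectral leakage between neighbouring range bins.
    spectrum = np.fft.fft(np.asarray(if_samples) * np.hanning(n))
    half = n // 2  # keep only the non-negative beat frequencies
    beat_freqs = np.fft.fftfreq(n, d=1.0 / sample_rate_hz)[:half]
    slope = bandwidth_hz / chirp_duration_s  # chirp slope S in Hz/s
    ranges = C * beat_freqs / (2.0 * slope)  # R = c * f_b / (2 * S)
    return ranges, np.abs(spectrum[:half])


# Synthetic check with one ideal reflector at 237 mm.
SAMPLE_RATE_HZ = 5e6   # hypothetical ADC rate (assumption, not from the text)
CHIRP_S = 40e-6        # chirp duration stated in the text
BANDWIDTH_HZ = 4e9     # assumes the chirp sweeps the whole 77-81 GHz band
TARGET_M = 0.237

n_samples = round(SAMPLE_RATE_HZ * CHIRP_S)
t = np.arange(n_samples) / SAMPLE_RATE_HZ
beat_hz = 2.0 * TARGET_M * (BANDWIDTH_HZ / CHIRP_S) / C
if_signal = np.exp(2j * np.pi * beat_hz * t)  # idealised complex IF signal

ranges, magnitude = range_profile(if_signal, SAMPLE_RATE_HZ, CHIRP_S, BANDWIDTH_HZ)
peak_range_m = ranges[np.argmax(magnitude)]
bin_spacing_m = C / (2.0 * BANDWIDTH_HZ)  # range-bin spacing of the raw FFT
```

Under these assumptions the raw range-bin spacing is c/(2B), about 37.5 mm, so the spectral peak falls within one bin of the true distance.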

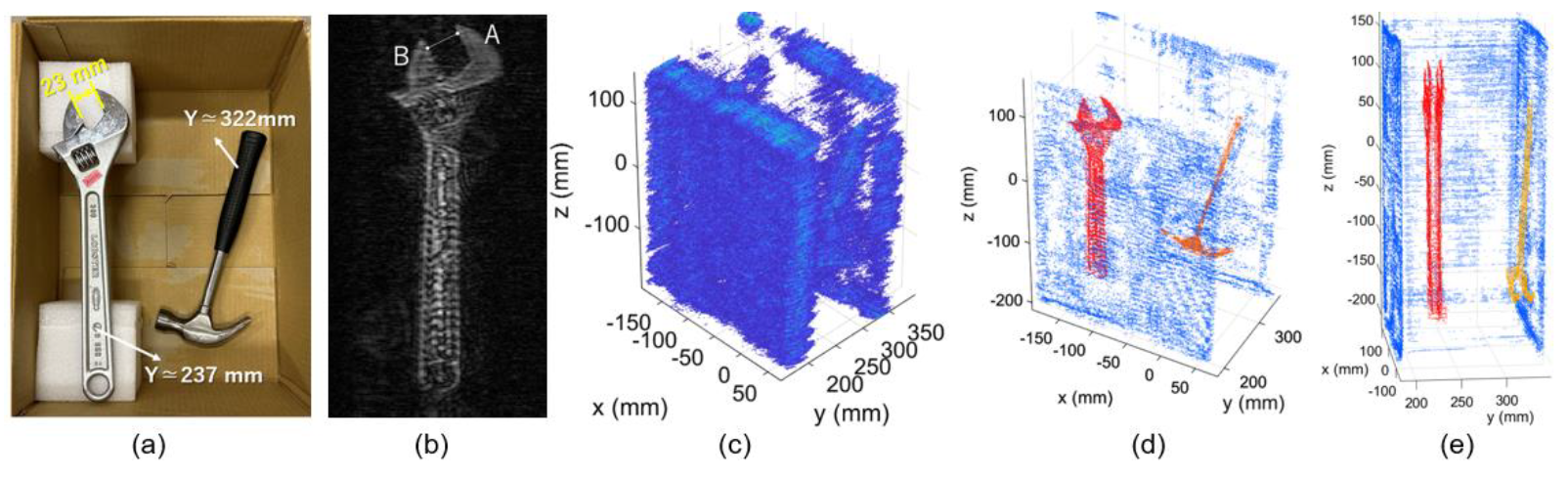

To assess the performance of the MMW imaging system and the accuracy of the data processing algorithm, a wrench and a hammer enclosed in a cardboard box were used as imaging samples (Fig. 1(a)). The wrench and hammer were approximately Y=235±5 mm and Y=320±5 mm from the MMW module, respectively. A 23 mm open space between the wrench jaws was confirmed in the 2D cross-sectional image at Y=237 mm (Fig. 1(b)). The calculated distance between points A and B was 23.5 mm, slightly exceeding the actual 23 mm measured with calipers, indicating a measurement accuracy better than 1 mm in the X-Z plane with our MIMO-SAR imaging. Fig. 1(c) shows the front view of the 3D point cloud after peak-intensity extraction, and Fig. 1(d) shows the accurate reconstruction of the wrench and hammer in 3D space, demonstrating effective noise elimination by the developed data processing techniques. Furthermore, we scanned the objects from four directions at 90-degree intervals. The acquired data were reconstructed, and the results from each direction were stitched to create a side view (Fig. 1(e)). The determined wrench thickness of 15.84 mm closely matches the actual 15 mm, indicating that the data processing algorithm achieves a distance accuracy better than 1 mm in the processed 3D point cloud.

Figure 1: (a) Wrench and hammer concealed in a cardboard box. (b) 2D cross-sectional image at Y = 237 mm. (c) 3D point cloud image after peak-intensity extraction. (d) Reconstructed 3D point cloud image from one direction. (e) Reconstructed 3D point cloud image from four directions.
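Stitching the four scans taken at 90-degree intervals can be sketched as a rigid rotation of each reconstructed cloud into a common frame, followed by concatenation. This is only an illustration under stated assumptions: the text does not describe the actual stitching procedure, so the rotation axis (taken parallel to Z through a known `center`), the sign of the angles, and the function names `rotate_about_vertical` and `stitch_views` are all hypothetical.

```python
import numpy as np


def rotate_about_vertical(points, angle_deg, center):
    """Rotate an (N, 3) array of (x, y, z) points about a Z-parallel axis through center."""
    theta = np.deg2rad(angle_deg)
    c, s = np.cos(theta), np.sin(theta)
    rot = np.array([[c, -s, 0.0],
                    [s, c, 0.0],
                    [0.0, 0.0, 1.0]])
    center = np.asarray(center, dtype=float)
    # Move the axis to the origin, rotate, then move back.
    return (np.asarray(points, dtype=float) - center) @ rot.T + center


def stitch_views(clouds, center, angles_deg=(0.0, 90.0, 180.0, 270.0)):
    """Bring each view into the frame of the first one and merge them into one cloud."""
    rotated = [rotate_about_vertical(p, a, center)
               for p, a in zip(clouds, angles_deg)]
    return np.vstack(rotated)
```

In practice the rotation center would have to be measured or estimated, and a registration step may be needed to correct residual misalignment between views.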

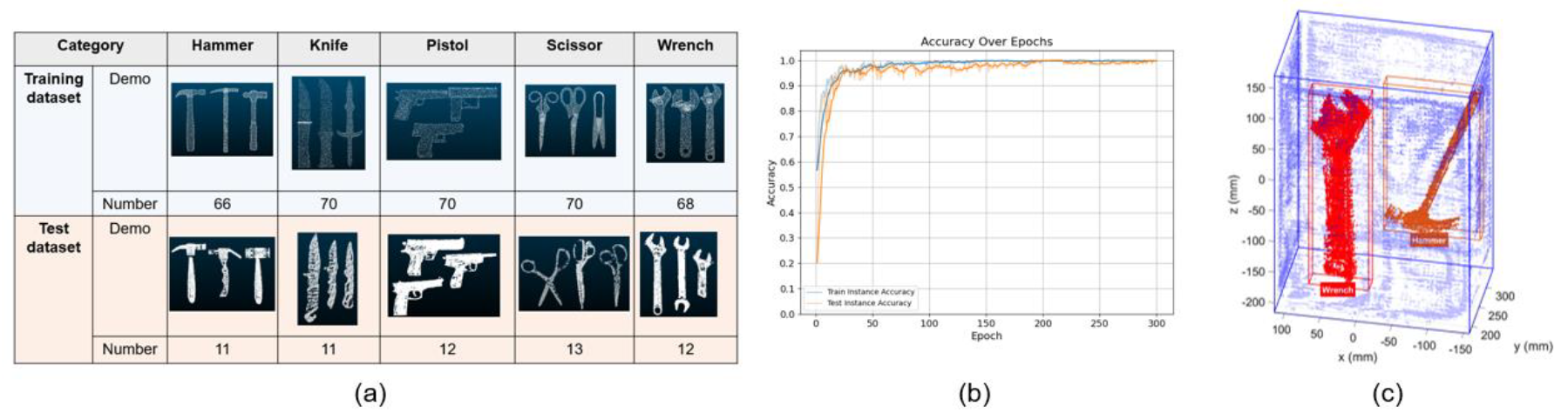

With high-precision data successfully acquired using the MMW radar, object classification using deep learning becomes feasible. To classify the high-precision MMW point clouds, we employ PointNet++, a deep learning method proposed by Qi and colleagues at Stanford University in 2017 [6]. Although point clouds are unordered and unconnected, PointNet++ can simplify them and extract complex point cloud features. Because real samples were insufficient, five categories of objects downloaded from the Internet were used as the training set. The test set was obtained by scanning five categories of real objects with the MMW imaging system and reconstructing the 3D point cloud data as described above. The datasets and their sample counts are shown in Fig. 2(a). To prepare a more flexible training set that contains both richly shaped samples and the particular point cloud characteristics of the MMW system, point cloud data from the original test set were rotated, mirrored, shifted, and scaled, and the results were added to the original training set as homomorphic data. After 300 epochs of training with 8192 sampling points, the accuracy reaches 99.8% on the training set and 99.6% on the test set, as shown in Fig. 2(b). To evaluate the trained network, the point cloud test set of 59 samples, after scaling, mirroring, and shifting, was used as the evaluation set, yielding 100% accuracy. Finally, Fig. 2(c) shows an application example in which the trained classification model is applied to the MMW point cloud data of the concealed objects from the four-direction scan described above. The classification results are shown under the box, and all concealed objects are correctly classified, which verifies the effectiveness of the method in practical applications.

Figure 2: (a) Schematics of the training and test datasets. (b) Accuracy over epochs. (c) Classification results of concealed objects.
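The rotation, mirroring, shifting, and scaling used to enlarge the training set can be sketched as follows. This is a minimal illustration, not the authors' pipeline: the parameter ranges, the choice of rotation axis and mirror plane, the random resampling to 8192 points, and the function names `transform_cloud`, `random_augment`, and `resample` are assumptions introduced here.

```python
import numpy as np


def transform_cloud(points, angle_deg=0.0, mirror=False,
                    shift=(0.0, 0.0, 0.0), scale=1.0):
    """Mirror, rotate about Z, scale, then shift an (N, 3) point cloud."""
    pts = np.asarray(points, dtype=float).copy()
    if mirror:
        pts[:, 0] *= -1.0  # mirror across the Y-Z plane (assumed plane)
    theta = np.deg2rad(angle_deg)
    c, s = np.cos(theta), np.sin(theta)
    rot = np.array([[c, -s, 0.0],
                    [s, c, 0.0],
                    [0.0, 0.0, 1.0]])
    return scale * (pts @ rot.T) + np.asarray(shift, dtype=float)


def random_augment(points, rng):
    """Draw one random transform; all ranges are illustrative assumptions."""
    return transform_cloud(
        points,
        angle_deg=rng.uniform(0.0, 360.0),
        mirror=bool(rng.integers(0, 2)),
        shift=rng.uniform(-0.1, 0.1, size=3),
        scale=rng.uniform(0.8, 1.25),
    )


def resample(points, n_points, rng):
    """Pick n_points rows at random, with replacement only if the cloud is too small."""
    pts = np.asarray(points)
    idx = rng.choice(len(pts), size=n_points, replace=len(pts) < n_points)
    return pts[idx]


rng = np.random.default_rng(0)
cloud = rng.normal(size=(500, 3))  # stand-in for one reconstructed MMW cloud
sample = resample(random_augment(cloud, rng), 8192, rng)
```

Rotation, mirroring, and shifting preserve the distances between points, while scaling multiplies them by a common factor, so each augmented sample keeps the shape of the original object.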

In summary, this study established a MIMO-SAR FMCW MMW radar imaging system and developed a technique for precise 2D and 3D reconstruction using the observed point cloud data. By successfully mitigating noise in smooth-surface 3D point cloud data, we achieved a measurement accuracy better than 1 mm. The acquired high-precision data facilitated the application of deep learning for object classification. Using PointNet++ for point cloud classification, our model achieved an accuracy of 99.8% on the training set and 99.6% on the test set. When applied to a real-world scenario, the trained model correctly classified concealed objects in four-direction scans, confirming its practical efficacy.

References

[1] S. M. Patole, M. Torlak, D. Wang, and M. Ali, “Automotive radars: A review of signal processing techniques,” IEEE Signal Processing Magazine 34(2), 22–35 (2017)

[2] Y. Wang, L. Yi, M. Tonouchi, and T. Nagatsuma, “High-Speed 600 GHz-Band Terahertz Imaging Scanner System with Enhanced Focal Depth,” Photonics 9(12), 913 (2022)

[3] Y. Wang, J. Su, T. Fukuda, M. Tonouchi, and H. Murakami, “Precise 2D and 3D Fluoroscopic Imaging by Using an FMCW Millimeter-Wave Radar,” IEEE Access 11, 84027–84034 (2023)

[4] J. Bai, L. Zheng, S. Li, B. Tan, S. Chen, and L. Huang, “Radar Transformer: An Object Classification Network Based on 4D MMW Imaging Radar,” Sensors 21(11), 3854 (2021)

[5] M. E. Yanik, D. Wang, and M. Torlak, “Development and Demonstration of MIMO-SAR mmWave Imaging Testbeds,” IEEE Access 8, 126019–126038 (2020)

[6] C. R. Qi, et al., “PointNet++: Deep Hierarchical Feature Learning on Point Sets in a Metric Space,” Advances in Neural Information Processing Systems 30, 5105–5114 (2017)